PULSE POINTS

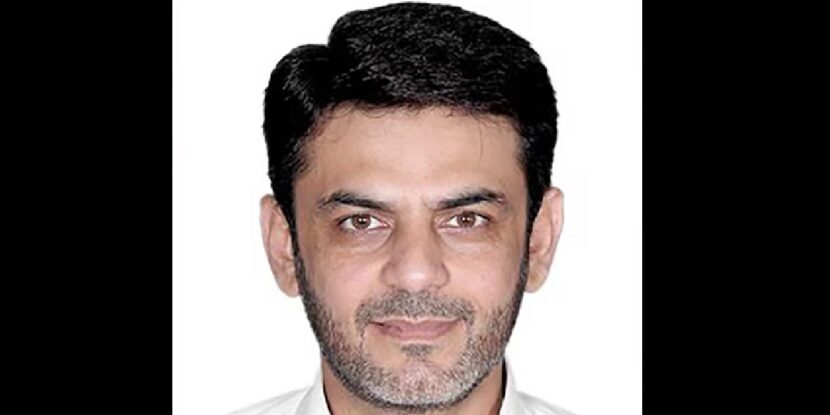

❓WHAT HAPPENED: Senator John Cornyn (R-TX) may be poised to receive President Donald J. Trump’s endorsement in a primary contest against Texas Attorney General Ken Paxton, despite the fact that he has repeatedly betrayed President Trump and the MAGA movement since 2016. The situation undermines Trump’s claims to value loyalty, and is infuriating his MAGA base in Texas and beyond.

👤WHO WAS INVOLVED: Senator John Cornyn, MAGA supporters, President Trump, and Ken Paxton.

📍WHEN & WHERE: Various instances from 2016 to 2026.

💬KEY QUOTE: “Who is a worse Senator, John ‘The Stiff’ Cornyn of Texas, or Mitt ‘The Loser’ Romney of Massachusetts (Utah?)? They are both weak, ineffective, and very bad for the Republican Party, and our Nation.” – Donald Trump, 2023

🎯IMPACT: Cornyn’s history is coming into sharp focus ahead of the Texas Senate primary run-off slated for May, and ahead of a much-coveted Trump endorsement.

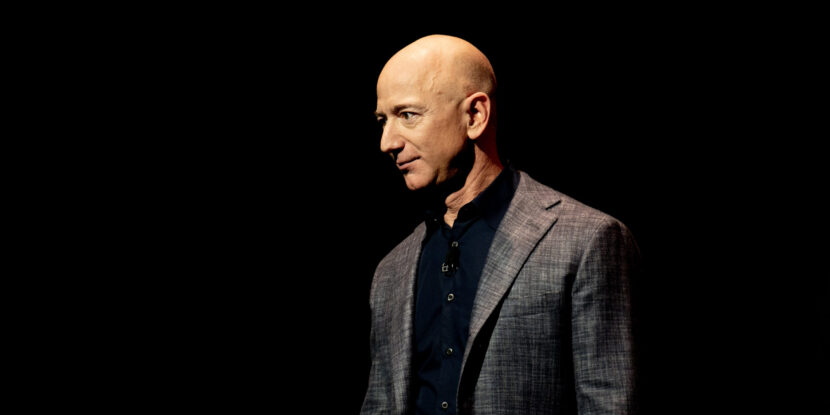

Republican Texas Senator John Cornyn, currently facing a primary run-off against Texas Attorney General and MAGA loyalist Ken Paxton, is reportedly on the cusp of receiving an endorsement from President Donald J. Trump, who will urge Paxton to drop out of the race. However, Cornyn has a long history of betraying Trump and scorning the MAGA base, leading many to wonder why the President would endorse him over a longtime ally like Paxton.

The matter is complicated further by the fact that members of Trump’s own 2024 campaign team, including Chris LaCivita and Susie Wiles, are currently consulting for Cornyn, and creating an echo chamber around the President that limits how much he hears from pro-Paxton voices.

Below, The National Pulse recalls six prominent examples of Cornyn backstabbing President Trump and his supporters:

1. Opposing Trump’s Border Wall.

Cornyn dismissed President Trump’s border wall as “naive” before Trump’s election in 2016, and boasted in 2020 that he had privately opposed Trump’s efforts to use national security funding to construct it after Trump entered office.

2. Refusing to Support Trump Against the Biden DOJ.

In July 2023, amid growing efforts by Joe Biden’s Department of Justice to have Trump indicted and potentially imprisoned, Cornyn declined to offer any support, saying, “I think that’s entirely within the purview of the Department of Justice and has nothing to do with the United States Senate.”

Asked if it would be “legitimate” for Jack Smith to charge Trump with attempting to overturn the 2020 election, he maintained a hands-off approach, insisting, “That’s going to depend on whether or not laws were broken. So clearly, I don’t know what they’re looking at. But I’m sure we’ll know in due time.”

3. Pushing Open Borders Policies.

After the Supreme Court ruled against the first attempt by the Trump administration to terminate DACA in 2020, Cornyn celebrated by taking to the Senate floor and saying, “DACA recipients must have a permanent legislative solution. They deserve nothing less. These young men and women have done nothing wrong.”

He continued by pushing amnesty for DACA illegals, declaring, “I believe the Supreme Court has thrust upon us a unique moment and an opportunity. We need to take action and pass legislation that will unequivocally allow these young men and women to stay in the only home, in the only country, they’ve known.”

4. Blaming Trump for January 6.

In the aftermath of the disorderly January 6, 2021, protests at the U.S. Capitol, Cornyn publicly denounced Trump for his “reckless and incendiary speech.”

Although he voted to acquit Trump during his Senate trial, he was scathing about the President’s conduct, suggesting it would be his biggest legacy and arguing that “no consideration [was] given to what the impact would be on the people listening, no consideration given to the likelihood that there would be outside agitators trying to stoke the fire for the mob.”

5. Dissing Trump 2024.

In 2023, as Trump geared up for his 2024 run, Cornyn told reporters, “I think President Trump’s time has passed him by,” and urged the GOP to “move on” to a supposedly more electable candidate.

“I think what’s the most important thing for me is that we have a candidate who can actually win. I don’t think President Trump understands that when you run in a general election, you have to appeal to voters beyond your base,” the Texas Senator said.

Cornyn endorsed Trump only after the New Hampshire primary, when it became apparent that none of his rivals in the Republican primary had any hope of supplanting him.

6. No Recess Appointments.

During his failed run for Senate Majority Leader, Cornyn backed the use of recess appointments to quickly fill out Trump’s cabinet. However, just days later, he reversed course and told reporters, “Obviously, I don’t think we should be circumventing the Senate’s responsibilities, but I think it’s premature to be talking about recess appointments right now,” as he and his colleagues were blocking the appointment of former Congressman Matt Gaetz (R-FL) as U.S. Attorney General.
