❓WHAT HAPPENED: The Office of Management and Budget (OMB) ordered federal agencies to compile reports on funding provided to Democrat-controlled states in what appears to be a broader federal effort to investigate widespread social services fraud.

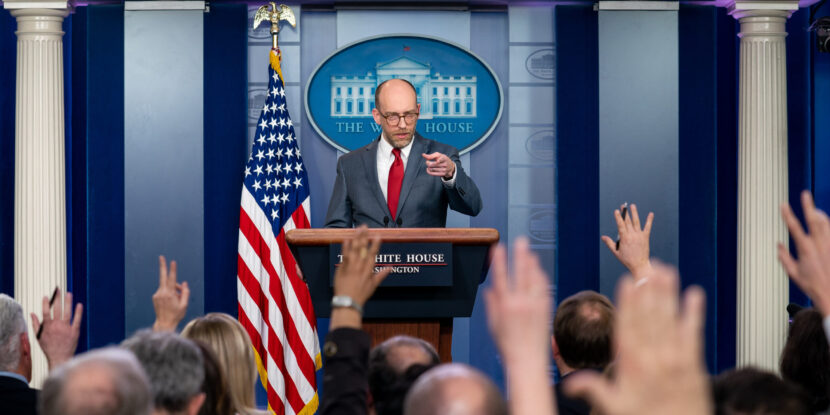

👤WHO WAS INVOLVED: The Trump White House’s Office of Management and Budget (OMB), OMB Director Russell Vought, federal agencies, and a number of Democrat-controlled states.

📍WHEN & WHERE: The memo was issued on January 20, with a deadline for agencies to submit data by January 28.

💬KEY QUOTE: “This is a data-gathering exercise only. It does not involve withholding funds, and therefore does not violate any court order.” – OMB memo

🎯IMPACT: The states slated for the data collection include California, Colorado, Connecticut, Delaware, Illinois, Massachusetts, Minnesota, New Jersey, New York, Oregon, Rhode Island, Vermont, Virginia, and Washington—along with Washington, D.C.

The Office of Management and Budget (OMB), led by Director Russell Vought, has instructed nearly all federal agencies to compile detailed reports on federal funding directed to Democratic-led states. According to documents that surfaced on Thursday, the directive excludes the Pentagon and the Department of Veterans Affairs (VA).

Notably, the January 20 memo emphasizes that this is a “data-gathering exercise only” and states that it “does not involve withholding funds, and therefore does not violate any court order.” While the memo does not outright state the intent of the data-gathering, it is likely tied to ongoing investigations into widespread allegations of social services fraud and the misuse of federal funds in Democrat-controlled states.

The states slated for the data collection include California, Colorado, Connecticut, Delaware, Illinois, Massachusetts, Minnesota, New Jersey, New York, Oregon, Rhode Island, Vermont, Virginia, and Washington state, along with Washington, D.C. The reports are expected to detail federal funding to states and localities, universities, and nonprofit organizations operating within these jurisdictions. The funds in question are appropriated by Congress. Agencies must submit the requested data by January 28.

Allegations of the criminal misuse and fraudulent disbursement of taxpayer dollars have continued to mount after numerous social services fraud schemes were exposed in Minnesota, most of which have been linked to the state’s large Somali immigrant community. Similar accusations have arisen in Maine and California as well.

The National Pulse reported earlier this month that U.S. Treasury Secretary Scott Bessent deployed his department’s Financial Crimes Enforcement Network (FinCEN) to begin a geographic targeting operation for the Minneapolis-St. Paul area, applying extra scrutiny to all businesses engaging in overseas money transfers. In addition to the FinCEN geographic targeting order, Sec. Bessent announced that the Internal Revenue Service (IRS) will soon launch a task force charged with investigating instances of COVID-19 pandemic relief fraud and violations of 501(c)(3) tax-exempt status by nonprofits tied to the numerous Somali community-linked social services fraud schemes.
