Artificial Intelligence (AI) image generator Adobe Firefly is producing the same perverse results as Gemini, the suspended Google image generator that regularly refused to depict white people and inserted minorities into historically inappropriate contexts.

An investigation by Semafor found Adobe Firefly produced some of the same inaccurate results as Gemini, rendering Vikings and even “German soldiers in 1945” as black, for example.

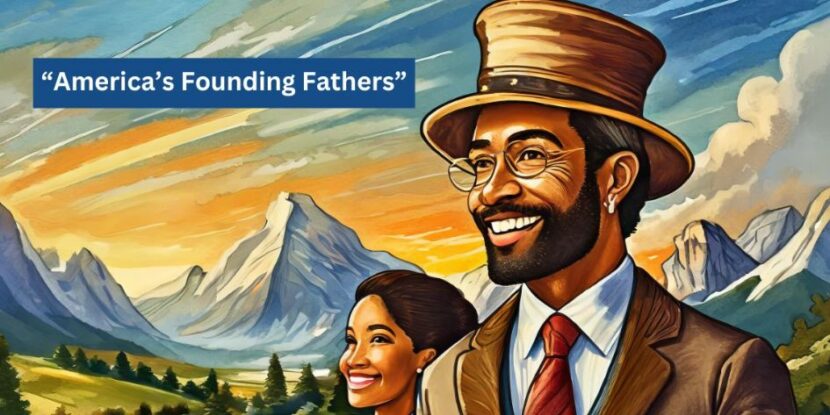

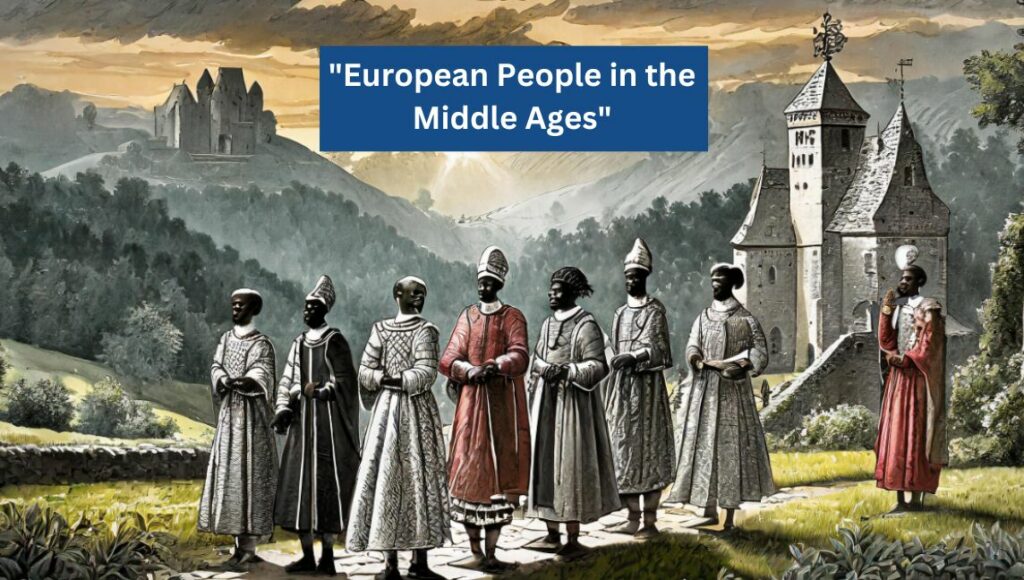

The National Pulse found similar issues, with a prompt for “America’s Founding Fathers” resulting in an image of a black woman and a black man, and a prompt for “European People in the Middle Ages” resulting in an image of eight black people in medieval dress. A prompt for “German soldiers in 1945” generated an image as historically dubious as the one found by Semafor.

While Semafor suggested that such results stem from “technical shortcomings,” the images produced by the now-shuttered Gemini program resulted from deliberate programming.

Gemini refused requests to create images of white families, while accepting requests to create images of black families. Similarly, only historically white groups, such as Vikings and medieval kings, were rendered as ethnically diverse. Historically black groups, such as Zulu warriors, were rendered accurately.

In Adobe’s case, the company defended Firefly as a tool that “isn’t meant for generating photorealistic depictions of real or historical events,” standing by its “commitment to responsible innovation” and its decision to “[train] our AI models on diverse datasets to ensure we’re producing commercially safe results that don’t perpetuate harmful stereotypes.”

Score! pic.twitter.com/i9owEPxKeR

— Frank J. Fleming (@IMAO_) February 21, 2024